
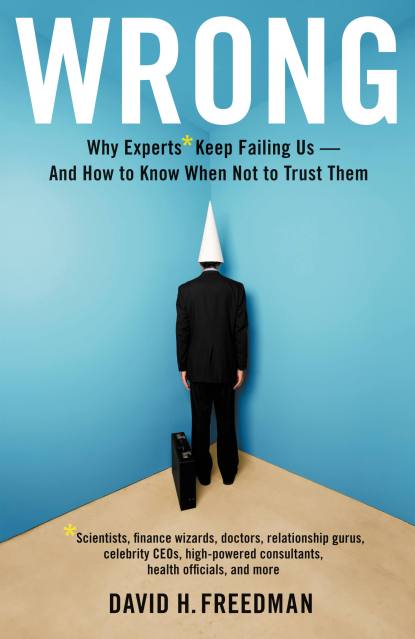

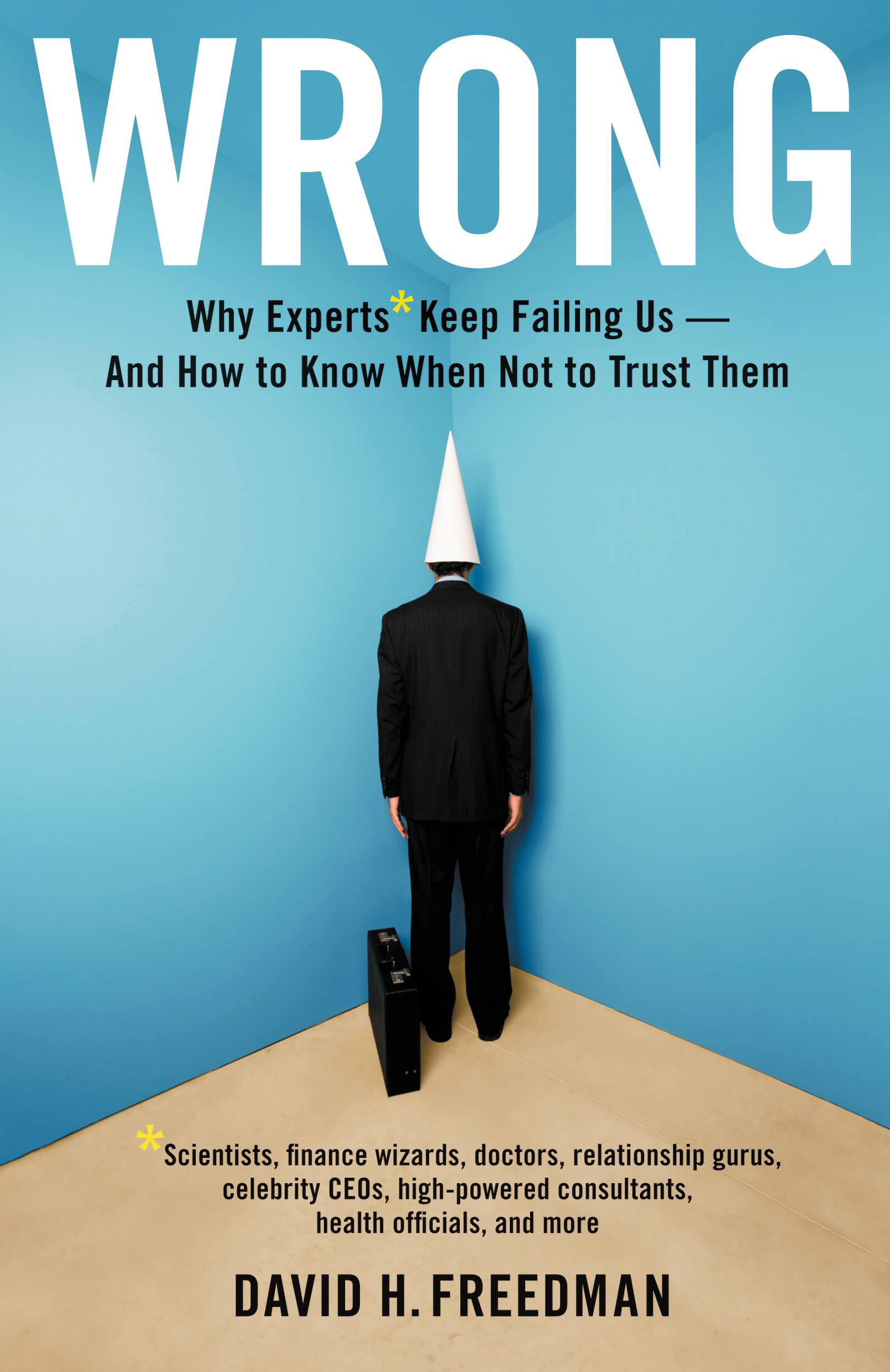

Wrong

Why experts* keep failing us--and how to know when not to trust them

*Scientists, finance wizards, doctors, relationship gurus, celebrity CEOs, high-powered consultants, health officials and more


Formats and Prices

- ebook: $13.99 ($17.99 CAD)

- Hardcover: $37.00 ($47.00 CAD)

This item is a preorder. Your payment method will be charged immediately, and the product is expected to ship on or around June 10, 2010. This date is subject to change due to shipping delays beyond our control.


Our investments are devastated, obesity is epidemic, test scores are in decline, blue-chip companies circle the drain, and popular medications turn out to be ineffective and even dangerous. What happened? Didn’t we listen to the scientists, economists and other experts who promised us that if we followed their advice all would be well?

Actually, those experts are a big reason we’re in this mess. And, according to acclaimed business and science writer David H. Freedman, such expert counsel usually turns out to be wrong, often wildly so. Wrong reveals the dangerously distorted ways experts come up with their advice, and why the most heavily flawed conclusions end up getting the most attention, all the more so in the online era.

But there’s hope: Wrong spells out the means by which every individual and organization can do a better job of unearthing the crucial bits of right within a vast avalanche of misleading pronouncements.



- On Sale: Jun 10, 2010

- Page Count: 304 pages

- Publisher: Little, Brown and Company

- ISBN-13: 9780316087919
