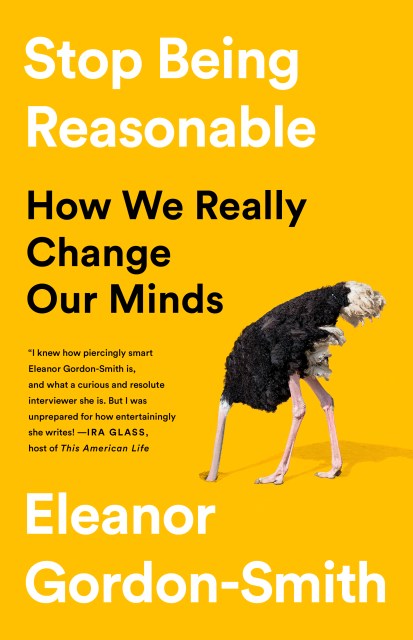

Stop Being Reasonable

How We Really Change Our Minds

Contributors

Formats and Prices

- On Sale: Oct 22, 2019

- Page Count: 240 pages

- Publisher: PublicAffairs

- ISBN-13: 9781541730441

Format:

- Hardcover: $26.00 ($33.00 CAD)

- ebook: $14.99 ($19.99 CAD)

- Audiobook Download (Unabridged)

This item is a preorder. Your payment method will be charged immediately, and the product is expected to ship on or around October 22, 2019. This date is subject to change due to shipping delays beyond our control.

A thought-provoking exploration of how people really change their minds, and how persuasion is possible.

In Stop Being Reasonable, Eleanor Gordon-Smith weaves a narrative that illustrates the limits of human reason.

Here, she tells the stories of people who have radically altered their beliefs, from the woman who had to reckon with her husband’s terrible secret to the man who finally left the cult he had been raised in since birth. Gordon-Smith shows how we can change the course of our own lives, and asks: What made these people change course? How should their reversals affect how we think about our own beliefs? And in an increasingly divided world, what do they teach us about how we might change the minds of others?

Inspiring, perceptive, and moving, Stop Being Reasonable explores why resistance to evidence is often rooted in self-preservation and fear, why we feel shame in admitting we are wrong, and why who we believe is often more important than what we believe. This fascinating book will completely change the way you look at the power of persuasion.

"I knew how piercingly smart Eleanor Gordon-Smith is, and what a curious and resolute interviewer. But I was unprepared for how entertainingly she writes! I read this with pleasure."

Ira Glass, host of This American Life
