Change Is the Only Constant

The Wisdom of Calculus in a Madcap World

Contributors

By Ben Orlin

Formats and Prices

- On Sale: Oct 8, 2019

- Page Count: 320 pages

- Publisher: Black Dog & Leventhal

- ISBN-13: 9780316509084

Format:

- Hardcover $30.00 $40.00 CAD

- ebook $14.99 $19.99 CAD

- Audiobook Download (Unabridged) $18.99

This item is a preorder. Your payment method will be charged immediately, and the product is expected to ship on or around October 8, 2019. This date is subject to change due to shipping delays beyond our control.

Buy from Other Retailers:

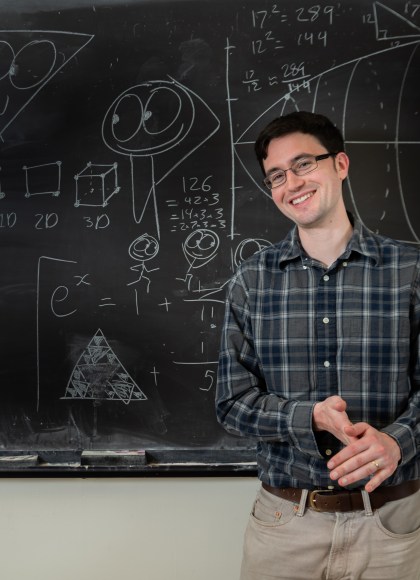

From popular math blogger and author of the underground bestseller Math With Bad Drawings, Change Is the Only Constant is an engaging and eloquent exploration of the intersection between calculus and daily life, complete with Orlin’s sly humor and wonderfully bad drawings.

- "Orlin guides us through the attic of calculus, which is filled not only with mathematical facts, but with true stories, riddles, mathematical fables, and paradoxes. This is the book I wish I had before I'd ever heard what a limit is." Zach Weinersmith, author of the webcomic Saturday Morning Breakfast Cereal

- "Exploring calculus not with complicated equations, but with stories, tons of illustrations, and (yes!) comics, Change Is the Only Constant is an impressively engaging and engrossing read. It's the first -- and so far only -- book of mathematics that I read entirely in a single sitting!" Ryan North, author of How to Invent Everything and Dinosaur Comics

- "Ben Orlin has written a funny, smart, endlessly engaging book -- that just happens to be about one of the most important and complicated subjects on the planet. If you love math, this book is for you. But if you've ever felt intimidated by math, or you've wondered why you should care about it, then this book is even more for you. (Don't tell the math people I said that.)" David Litt, New York Times bestselling author of Thanks, Obama and Obama speechwriter

- "In Ben Orlin's delightful treatment, calculus is like a box of chocolates. You never know what you're going to get next -- a poem, a proof, a cartoon, a quip. But despite all the changes, one thing stays constant: It's one tasty morsel after another." Steven Strogatz, professor of mathematics, Cornell University, and author of Infinite Powers

- "With wit that had me laughing from page one, Change Is the Only Constant describes calculus as a way of thinking about the world, driven by insightful and hilariously illustrated examples drawn not just from the usual suspects, like physics and economics, but from history, poetry, literature, and the thoughts of a corgi at the beach." Grant Sanderson, creator of 3Blue1Brown

- "All the calculus you never learned, broken up, broken down, illustrated, and friendly. Orlin's storybook telling of the history of math is a treat for your inner geek, and a major gift for your adult mind. A pleasure!" Rebecca Dinerstein, author of The Sunlit Night

- "The book is a more polished, extensive discussion of the concepts that pepper Orlin's blog, featuring his trademark caustic wit, a refreshingly breezy conversational tone, and of course, lots and lots of bad drawings. It's a great, entertaining read for neophytes and math fans alike because Orlin excels at finding novel ways to connect the math to real-world problems -- or in the case of the Death Star, to problems in fictional worlds." Ars Technica, on Math With Bad Drawings

- "Ben Orlin is terribly bad at drawing. Luckily he's also fantastically clever and charming. His talents have added up to the most glorious, warm, and witty illustrated guide to the irresistible appeal of mathematics." Hannah Fry, mathematician, University College London and BBC presenter, on Math With Bad Drawings

- "MATH WITH BAD DRAWINGS is a gloriously goofy word-number-and-cartoon fest that drags math out of the classroom and into the sunlight where it belongs. Great for your friend who thinks they hate math -- actually, great for everyone!" Jordan Ellenberg, author of How Not To Be Wrong, on Math With Bad Drawings

- "Brilliant, wide ranging, and irreverent, Math with Bad Drawings adds ha ha to aha. It'll make you smile -- plus it might just make you smarter and wiser." Steven Strogatz, author of The Joy of X, on Math With Bad Drawings

- "Orlin's ability to masterfully convey interesting and complex mathematical ideas through the whimsy of drawings (that, contrary to the suggestion of the title, are actually not that bad) is unparalleled. This is a great work showing the beauty of mathematics as it relates to our world. This is a must read for anyone who ever thought math isn't fun, or doesn't apply to the world we live in!" John Urschel, mathematician named to Forbes® "30 Under 30" list of outstanding young scientists and former NFL player, on Math With Bad Drawings

- "[Orlin's] latest cartoon triumph." New Scientist
